I’m a fan of agile practices in programming, and encourage my team to work along agile principles as much as possible. One thing that has always been tricky about that for us, however, is that we don’t really match the usual profile of a software team.

Most agile teams (at least in the literature) are focused on a single project, and have multiple people working together to get that project done. We, on the other hand, do all the development work for Texas State University’s Learning Management System (about 32,000 users), Content Management System (287 sites at current count), an Event Calendar system, the University iPhone app, a reservation system for training classes, a custom web content caching system, various custom-built content management systems for accreditation and regulatory compliance, and a bevy of internal tools — all with 6 people.

Needless to say, I’m very proud of my team.

Prioritizing the time of six people when we have ongoing responsibility for more than twice that many projects is, however, a daunting challenge. Our approach for a number of years had been to set release milestones for each project, to do release planning meetings to determine what should go into a given release based on our Planning Poker estimates, and then to try our best to get the work done in time.

This approach had a few problems:

- Release planning meetings were long and boring. We would walk through the list of unresolved tasks for a given project one by one to see if anyone wanted to prioritize that task. 90% of our time was spent saying “Ticket #666: add a green widget to the defrobulator. Anyone interested? Anyone? Class? Bueller?” (Only to have Bueller pipe up three tickets later: “Can we go back to ticket #666 for a minute?”)

- It was easy for people who had an interest in one project to commit 100% of the development team’s time to that project, while folks who were keen on another project would commit all of our time to that project as well. Our planning process didn’t reflect the fact that all the projects were competing for the same resources.

- If we finished all of the tickets for a release early and had extra time (a pretty rare problem, admittedly), we didn’t know what to work on next.

So a few months back, I decided to try an experiment. I got the stakeholders for all of our projects in a room together, gave them each 10 pennies, and explained to them the rules of the game:

“Today you are going to help the development team set our priorities. You each have 10 pennies, which represent tasks you can vote for. In order to vote for a particular task, write it on an index card, put that card in the middle of the table, and plunk down as many of your pennies as you’d like on that card. You can use all of your pennies on one task, or spread them among as many tasks as you like. Also, I encourage respectful argument. Try your hardest to persuade the people around you that they should put their pennies on the tasks you like as well. When we’re done, the number of pennies on a task will help determine its priority for our team.”

Good natured chaos ensued for the next 30 minutes as we wrote cards, passed them around, combined them, discussed the relative merits of the tasks ahead, bumped into each other as we moved around the table, tried to figure out what the heck some of the cards meant, and generally made a mess of the conference room we were working in. When we were done, we had a big, unruly pile of index cards with big, unruly piles of pennies on each:

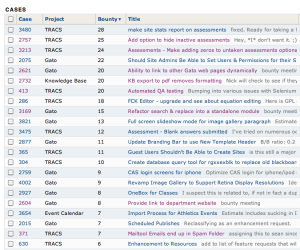

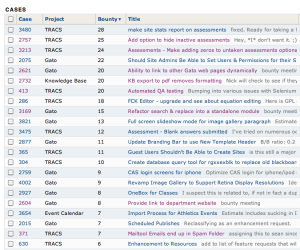

After the meeting’s conclusion, the dev team took another 15 minutes to count the pennies on each card and put that count into a special “Bounty” field in our ticketing system, creating new tickets as needed. (We use “bounty” and “pennies” interchangeably.) When I was done, I told the system to sort our tickets by bounty, and suddenly had a prioritized list, across all of our projects, of what tasks had the most business value. Beautiful!

Task List Sorted by Bounty

Of course, the number of pennies on a given ticket doesn’t directly determine the order in which we work on things. We also factor in the estimates for a given task we’ve come up with individually or during our Planning Poker sessions. One can divide the pennies by the estimated hours to get a “bang for the buck” rating for each of the tickets — a much more useful way to prioritize one’s work.

I don’t like to assign tasks to people on my team directly more than necessary. I find people to generally enjoy work much more when they are able to make their own decisions about what to spend their time on. On the other hand, I do want us as a team to provide the most real value we possibly can to our various customers. Since the penny game provided us a “here are tasks with business value” list, I decided to provide a couple of incentives for folks who were completing those tasks:

- I took a bizarre southwestern style pot that I had sitting around, labeled it the “Pot of Honor”, and told the team that it would be filled with candy and awarded to the team member each week who managed to complete tasks worth the most pennies during that span. Battling for the dubious honor of having this homely artifact rest on one’s desk for the week provides some silly, low-level competition and recognition for individuals.

- I set the team a collective, cumulative goal and told them I’d take them to lunch when they reached it. When we tally pennies for the awarding of the Pot of Honor, we also add up the number of pennies the whole team has completed and add them to a running total. Progress is noted on our work-area whiteboard, so we can all see how close we are to getting to have free food.

The Pot of Honor

I’ve also made space on the whiteboard in our team area where we have our daily stand up meetings to put up the “Top 10” list of tasks that have accumulated the most pennies to help maintain focus on those high-value items.

The next time we played the penny game, a month later, it went much more quickly: we already had cards on the table from the last meeting, everyone had a better idea of what we were doing, and some folks had looked through the tickets in advance to see which tasks they wanted to support. I was surprised to see that, as we got more practiced, we were able to finish the entire exercise in about 20 minutes. We simply added the new pennies to the existing bounties in the ticketing system, making them increasingly juicy as they got older and people still had interest in them.

We’ve been playing the game for a number of months now, and I count our experiment a solid success. Advantages it has provided for us:

- Prioritization is way more fun and engaging. It also goes considerably faster than all of our individual release planning sessions did.

- The development team always has a clear idea of what our business needs are, and which of our tasks will provide the most value.

- Stakeholders cannot say “everything is top priority”, but are forced to choose where to allocate their pennies. The finite scarcity of pennies reflects the limits on developer time.

- Individual developers can exercise a good deal of autonomy and choose their own tasks while we still, as a team, deliver on the things we need to.

- There are a number of tasks that, while people say they are important, apparently do not merit the expenditure of a penny. As these persist for a sufficient period of time without accumulating any pennies, we can close them as not really being a high priority. (We can always reopen them later if someone decides to spend a penny to raise them from the dead.)

We’ve introduced a few refinements as we’ve gone along: Because some of our systems have tens of thousands of users, it’s ill-advised to get all of the stakeholders in a room at once. To account for this, we give the support staff extra pennies to distribute as proxies for the absent users. We’ve started writing project names and ticket numbers on the index cards to make it easier to correlate them to our ticket system. We’ve begun bringing as many laptops and iPads as possible to the meetings so that people can see the details on the various tickets in question. We now add a penny to each task whenever a user request comes into our support team.

I should note that during the time I was designing this process, I was also reading through Total Engagement, and consciously built in many of their 10 Ingredients for Games: Feedback across a range of time scales (completion of individual tickets, weekly discussion of bounties cleared, longer-term goal of team lunch), Reputation (the awarding of the Pot of Honor), Marketplaces and Economies (the game itself is a market to “buy” the development team’s time), Competition Under Rules that are Explicit and Enforced (there’s no ambiguity about how many pennies are on a ticket or how they’re assigned), Teams (working toward the common lunch goal), and Time Pressure (weekly tallies of points, implicit time pressure of not wanting to be the last person with pennies left to spend while everyone else sits around). I think these elements have been critical in making this a more engaging way for us to do things.

So, if you’re facing a similar challenge with more projects than you have people, feel free to swipe any of these ideas that look helpful. I hope they’re of some use to you. If you do give the penny game a try, please post here and let me know how it goes. Good luck!